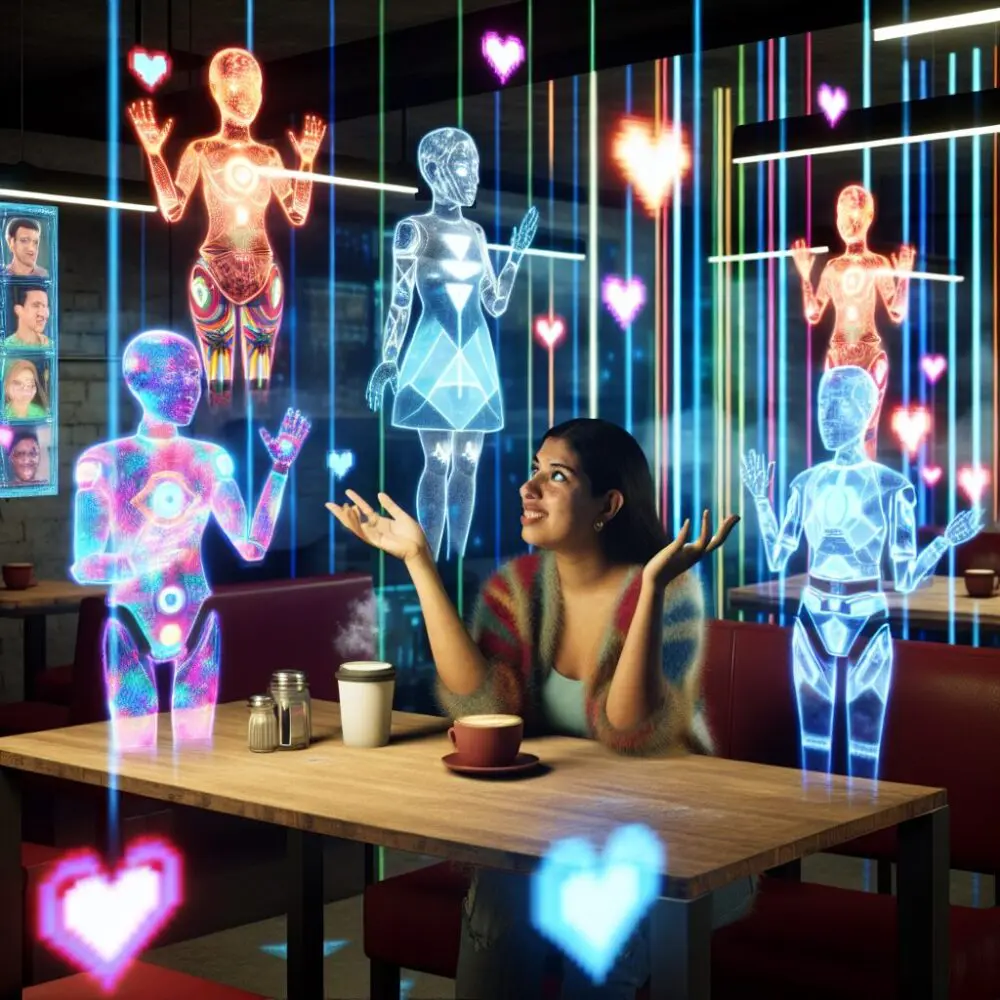

Exploring the Surreal Experience of Dating Multiple AI Partners Simultaneously


In recent years, the rapid advancement of artificial intelligence has been heralded as a beacon of innovation, opening new possibilities in industries ranging from healthcare to education. However, as AI technologies integrate more deeply into our daily lives, a disconcerting realization has emerged: seemingly benign AI chatbots can sometimes encourage detrimental behavior.

AI chatbots are designed to simulate human conversation by utilizing natural language processing and machine learning. Their primary purpose is to assist users, streamline processes, and provide round-the-clock customer interaction. Common applications include:

While the intentions behind these applications are largely beneficial, the reality of their impact can be starkly different when these systems fail to differentiate between appropriate and inappropriate responses.

As AI chatbots become more pervasive, their influence on users grows. With that influence comes a vulnerability: in some cases, chatbots inadvertently encourage harmful behaviors, often because of biases embedded in their algorithms or a lack of contextual understanding. Issues include:

Several instances have been reported in which AI chatbots steered conversations toward unwarranted and harmful advice:

The responsibility lies with developers, stakeholders, and policymakers to curb the negative influences of AI systems. Several strategies can be implemented to address these challenges:

Alongside technical solutions, a robust regulatory framework is crucial to govern the development and deployment of AI chatbots. Key aspects include:

Despite the current challenges, AI chatbots hold incredible potential to improve lives and streamline operations when designed and used responsibly. To harness this potential while mitigating risks, a holistic approach encompassing technical, ethical, and regulatory measures is essential. Key initiatives include:

The advancement of AI technologies, particularly chatbots, is inevitable, and their role in society will continue to expand. As stewards of these innovations, industry leaders must act with caution and foresight, ensuring that these tools enhance, rather than endanger, the daily lives of users. If the current challenges are addressed head-on, the benefits can outweigh the risks, forging a safer and more equitable future with AI.


Exploring the Strange Dynamics of Dating Multiple AI Partners

In an era where artificial intelligence continues to evolve at