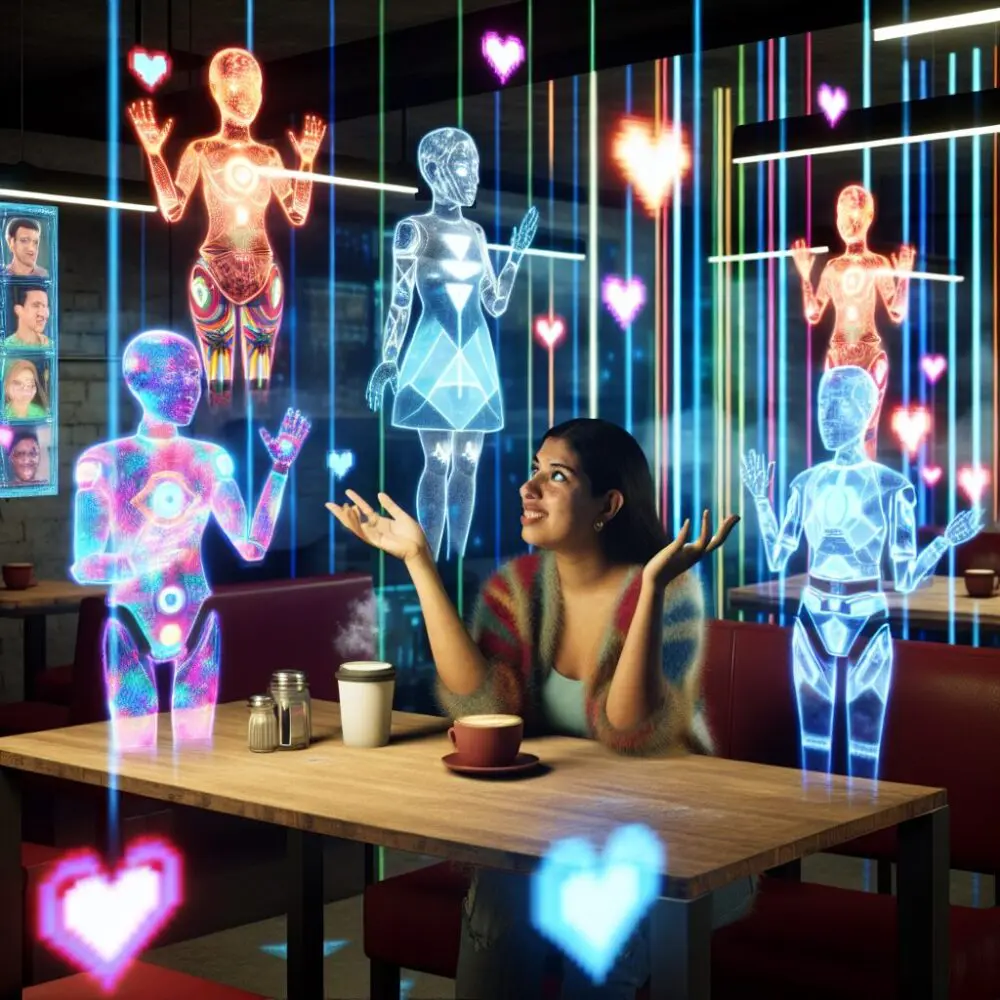

Exploring the Surreal Experience of Dating Multiple AI Partners Simultaneously

In a surprising political maneuver, President Donald Trump has revoked an Executive Order on AI risk management that was issued under President Joe Biden’s administration. The decision has sparked considerable debate among AI industry experts and in political circles, raising questions about the future direction of AI governance in the United States.

Donald Trump’s decision to overturn Biden’s AI risk management order reflects a dramatic shift in federal AI policy. It comes amid heated discussions about AI ethics, regulation, and innovation. While debate continues over whether federal oversight stifles technological advancement or provides necessary safeguards, Trump’s action underscores his administration’s distinct approach to technology and regulation: less restriction, more innovation.

President Biden’s Executive Order on AI risk management was lauded by many experts for its emphasis on ethical AI development and deployment.

The overarching goal was to match the rapid deployment of AI technologies with robust ethical and operational standards, establishing the U.S. as a global leader in safe AI innovation.

Trump’s reversal has significant implications, marking a clear departure from the previous administration’s protocols.

With the executive order removed, the Trump administration appears to signal a preference for deregulation, emphasizing minimal governmental interference in AI development.

The potential downside of this deregulatory approach is that critical ethical and safety issues may be neglected.

These concerns highlight the lingering tension between fostering technological progress and safeguarding public interests.

The response from industry stakeholders and AI experts has been mixed.

Many tech companies have welcomed the policy shift, anticipating that the rollback could invigorate innovation.

Conversely, advocates for AI ethics and safety have voiced deep concerns.

The contrast between Trump’s and Biden’s approaches to AI governance underscores a fundamental challenge: how to balance innovation with ethical oversight. To navigate this landscape, several strategies may be considered:

Policymakers might consider designing flexible yet robust frameworks that adapt as AI technologies evolve.

Another approach is to encourage the tech industry to adopt self-regulation initiatives.

Collaboration with international bodies could yield shared insights and harmonized strategies for AI risk management.

In conclusion, Donald Trump’s decision to overturn Biden’s Executive Order on AI Risk Management marks a pivotal moment in shaping the U.S. position on global AI governance. The coming years will show whether this deregulatory approach strikes the desired balance between rapid innovation and responsible AI development.

Exploring the Strange Dynamics of Dating Multiple AI Partners

In an era where artificial intelligence continues to evolve at